Latest in iFLYTEK Speech Technology: Speech Recognition and Speech Synthesis

In the second installment of our new series on iFLYTEK's research progress, we spotlight our advances in speech recognition and speech synthesis.

Speech Recognition

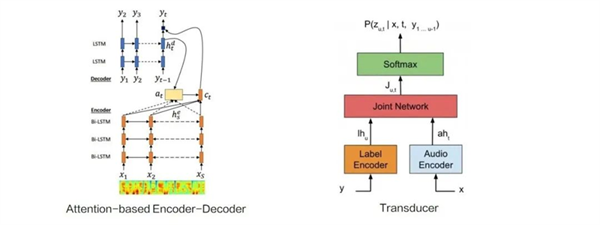

End-to-end modeling based on autoregressive models has become the mainstream framework for speech recognition. Its two main forms are the attention-based encoder-decoder (ED) and the Transducer, both of which include a prediction network (decoder) conditioned on previously emitted tokens. The autoregressive approach effectively builds a language model into the recognizer, but it also means that each output can only be predicted once the preceding outputs are available.

However, in large-scale deployment, this sequential dependency limits parallelism and inference efficiency, so the iFLYTEK Research Institute has begun to explore whether a non-autoregressive framework can achieve comparably high accuracy. CTC (Connectionist Temporal Classification) is one promising way to accomplish this.
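To make the contrast with autoregressive decoding concrete, here is a minimal sketch of greedy (best-path) CTC decoding. It assumes index 0 is the blank symbol and uses a toy score matrix; neither detail comes from iFLYTEK's system. The key point is that each frame's label is chosen without looking at earlier outputs, so that step parallelizes across frames.

```python
from typing import List, Optional, Sequence

BLANK = 0  # assumption: index 0 of the output vocabulary is the CTC blank


def argmax(row: Sequence[float]) -> int:
    """Return the index of the highest score in one frame's distribution."""
    return max(range(len(row)), key=lambda i: row[i])


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]], blank: int = BLANK) -> List[int]:
    """Best-path CTC decoding.

    The per-frame argmax does not depend on any earlier output, so it can be
    computed for all frames at once; only the cheap collapse pass is sequential.
    """
    best_path = [argmax(row) for row in frame_scores]
    tokens: List[int] = []
    prev: Optional[int] = None
    for label in best_path:
        # Keep a label only if it differs from the previous frame and is not blank.
        if label != prev and label != blank:
            tokens.append(label)
        prev = label
    return tokens


# Six frames over a toy vocabulary {0: blank, 1, 2}.
frames = [
    [0.1, 0.8, 0.1],
    [0.1, 0.8, 0.1],
    [0.9, 0.05, 0.05],
    [0.1, 0.8, 0.1],
    [0.2, 0.1, 0.7],
    [0.9, 0.05, 0.05],
]
print(ctc_greedy_decode(frames))  # [1, 1, 2]
```

The blank in the third frame is what lets the decoder emit token 1 twice; without it, the two runs of 1 would merge into a single token.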

When CTC is used to model Chinese characters directly, its hidden-layer representations can capture character-level context, much like masked-token recovery or error correction in natural language processing.

To bridge the large mismatch between speech and text sequence lengths, the iFLYTEK Research Institute designed a scheme that inserts blanks into the text, expanding it to frame level. This allows the model to be trained jointly on pure text data and speech CTC data. Results show that this approach can match or even outperform autoregressive encoder-decoder (ED) and Transducer models.
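The article does not say how the blanks are placed. As a minimal sketch, assuming a simple uniform scheme (the functions `expand_to_frames` and `ctc_collapse` are hypothetical names), the text can first be interleaved with blanks so repeated characters stay separable, then stretched to the target number of frames. Collapsing the result with the usual CTC rule recovers the original text, which is what makes such expanded text usable as frame-level training input.

```python
from typing import List, Optional, Sequence


def expand_to_frames(tokens: Sequence[int], num_frames: int, blank: int = 0) -> List[int]:
    """Expand a token sequence to num_frames labels by inserting blanks.

    Hypothetical scheme: build [b, t1, b, t2, ..., tn, b], then repeat positions
    as evenly as possible until the sequence is num_frames long.
    """
    base = [blank]
    for t in tokens:
        base += [t, blank]
    if num_frames < len(base):
        raise ValueError("num_frames must be at least 2 * len(tokens) + 1")
    # Map each output frame to a position in the interleaved sequence; every
    # position receives at least one frame because num_frames >= len(base).
    return [base[i * len(base) // num_frames] for i in range(num_frames)]


def ctc_collapse(labels: Sequence[int], blank: int = 0) -> List[int]:
    """Standard CTC collapse: merge consecutive repeats, then drop blanks."""
    out: List[int] = []
    prev: Optional[int] = None
    for label in labels:
        if label != prev and label != blank:
            out.append(label)
        prev = label
    return out


expanded = expand_to_frames([5, 5, 7], num_frames=10)
print(expanded)                # [0, 0, 5, 0, 0, 5, 0, 0, 7, 0]
print(ctc_collapse(expanded))  # [5, 5, 7]
```

A learned duration model would place blanks more realistically; uniform stretching is used here only because it is easy to verify.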

Additionally, the iFLYTEK Research Institute proposes a multi-task learning framework with multiple semantic evaluation tasks to improve the intelligibility of recognition results. Additional layers are stacked on top of the word-level CTC; taking its word-level representations as input, they perform intent classification, grammar evaluation, and other judgments. iFLYTEK expects this design to soon enable intent classification of complete sentences.
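The article does not describe the extra layers, so the following is only a sketch under stated assumptions: the word-level CTC representations are mean-pooled into one sentence vector, each auxiliary task uses a single linear head, and the training objective is a weighted sum of the CTC loss and the task losses. The loss weights `w_intent` and `w_grammar` and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Head = Tuple[List[Vector], Vector]  # (weight rows, bias), one row per class


def mean_pool(word_reprs: Sequence[Vector]) -> Vector:
    """Average word-level vectors into a single sentence-level vector."""
    dim = len(word_reprs[0])
    return [sum(v[d] for v in word_reprs) / len(word_reprs) for d in range(dim)]


def linear(x: Vector, head: Head) -> Vector:
    """Apply a linear classification head: one logit per class."""
    weights, bias = head
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def cross_entropy(logits: Vector, target: int) -> float:
    """Numerically stable softmax cross-entropy for one example."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[target]


def multitask_loss(
    ctc_loss: float,
    word_reprs: Sequence[Vector],
    intent_head: Head,
    grammar_head: Head,
    intent_target: int,
    grammar_target: int,
    w_intent: float = 0.5,
    w_grammar: float = 0.5,
) -> float:
    """Weighted sum of the recognition (CTC) loss and two auxiliary task losses."""
    pooled = mean_pool(word_reprs)
    intent_loss = cross_entropy(linear(pooled, intent_head), intent_target)
    grammar_loss = cross_entropy(linear(pooled, grammar_head), grammar_target)
    return ctc_loss + w_intent * intent_loss + w_grammar * grammar_loss


# Toy example: three word vectors, three intent classes, two grammar classes.
words = [[0.2, -0.1], [0.4, 0.3], [0.0, 0.1]]
intent = ([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]], [0.0, 0.0, 0.0])
grammar = ([[0.5, 0.5], [-0.5, -0.5]], [0.0, 0.0])
print(multitask_loss(1.2, words, intent, grammar, intent_target=0, grammar_target=0))
```

Because the auxiliary losses share the CTC encoder, their gradients push the word-level representations toward carrying sentence-level meaning, not just character identity.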

Speech Synthesis

Much effort in the field has gone into building general speech synthesis frameworks, such as end-to-end modeling with VITS (Variational Inference with adversarial learning for end-to-end Text-to-Speech) and prosodic representation.

With the SMART-TTS framework, iFLYTEK aims to modularize the learning process of speech synthesis and strengthen each module through pre-training, rather than learning a direct mapping from text to acoustic features.

SMART-TTS is now available on the iFLYTEK Open Platform, and its synthesized speech can be heard in a variety of mobile apps.

For more information about SMART-TTS applications, please visit:

iFLYTEK's SMART Text-To-Speech (SMART-TTS) Imbues AI-created voices with Emotion

In addition to SMART-TTS, the iFLYTEK Research Institute has also made progress in generating virtual voices.

Metaverse technologies are increasingly popular, but giving NPCs (non-player characters) fitting voices remains a challenge. Audiobook production faces the same problem: better virtual voiceover technology could let more authors publish audiobooks.

Virtual timbre generation trains a speech synthesis model on the combined voices of many speakers. iFLYTEK uses a flow model to project the learned timbre representation into a new latent space, then combines representations from this space with the existing text and prosody representations for speech synthesis.
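The flow architecture is not described in the article. The sketch below assumes a single RealNVP-style affine coupling layer with a hand-written toy conditioner (a trained model would learn it with a neural network), and assumes that the representations are combined by simple concatenation. Interpolating between speakers and sampling from a standard-normal prior are illustrations of how an invertible latent space could yield new timbres; they are not confirmed details of iFLYTEK's method, and all function names are hypothetical.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def _scale_and_shift(cond: Vector) -> Tuple[Vector, Vector]:
    """Toy conditioner. In a trained flow, a neural network would produce these."""
    log_scale = [math.tanh(0.5 * c) for c in cond]
    shift = [0.3 * c - 0.1 for c in cond]
    return log_scale, shift


def _split(v: Vector) -> Tuple[Vector, Vector]:
    if len(v) % 2:
        raise ValueError("this sketch assumes an even embedding size")
    half = len(v) // 2
    return v[:half], v[half:]


def flow_forward(x: Vector) -> Vector:
    """Timbre embedding -> latent. The first half passes through unchanged and
    conditions an affine transform of the second half."""
    x_a, x_b = _split(x)
    log_s, t = _scale_and_shift(x_a)
    return x_a + [xb * math.exp(ls) + tt for xb, ls, tt in zip(x_b, log_s, t)]


def flow_inverse(z: Vector) -> Vector:
    """Latent -> timbre embedding: the exact inverse of flow_forward."""
    z_a, z_b = _split(z)
    log_s, t = _scale_and_shift(z_a)
    return z_a + [(zb - tt) * math.exp(-ls) for zb, ls, tt in zip(z_b, log_s, t)]


def mix_timbres(x1: Vector, x2: Vector, alpha: float) -> Vector:
    """Blend two speakers by interpolating in the latent space, then mapping back."""
    z1, z2 = flow_forward(x1), flow_forward(x2)
    return flow_inverse([(1 - alpha) * a + alpha * b for a, b in zip(z1, z2)])


def sample_timbre(dim: int, rng: random.Random) -> Vector:
    """Draw a new timbre from a standard-normal latent prior."""
    return flow_inverse([rng.gauss(0.0, 1.0) for _ in range(dim)])


def synthesis_condition(text_repr: Vector, prosody_repr: Vector, timbre: Vector) -> Vector:
    """Concatenate text, prosody, and timbre representations (assumed combination)."""
    return text_repr + prosody_repr + timbre


speaker_a = [0.2, -1.0, 0.5, 1.5]
speaker_b = [-0.4, 0.3, 1.1, -0.2]
blend = mix_timbres(speaker_a, speaker_b, 0.5)
new_voice = sample_timbre(4, random.Random(0))
cond = synthesis_condition([0.1, 0.2], [0.7], blend)
```

Invertibility is the reason to use a flow here: any point in the latent space, whether interpolated or sampled, maps back to a valid timbre embedding that the synthesizer can consume.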

Training uses a large amount of speaker data, and some speakers are labeled with timbre attribute tags (such as age, gender, and vocal characteristics). The model has so far been used to generate more than 500 virtual timbres, with the naturalness of the synthesized speech exceeding 4.0 MOS (mean opinion score).
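MOS is the average of listener ratings on a 1 to 5 scale, so a score above 4.0 means listeners rated the speech between "good" and "excellent" on average. The article does not state the number of listeners or how uncertainty was reported; the sketch below is a generic calculation that assumes a normal-approximation 95% confidence interval, and the ratings are hypothetical.

```python
import math
from statistics import stdev
from typing import Sequence, Tuple


def mos_with_ci(ratings: Sequence[int], z: float = 1.96) -> Tuple[float, float]:
    """Return the mean opinion score and the half-width of an approximate 95% CI.

    Uses a normal approximation, which is only reasonable for a decent number of ratings.
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("ratings must be on the 1-5 scale")
    mos = sum(ratings) / len(ratings)
    half_width = z * stdev(ratings) / math.sqrt(len(ratings))
    return mos, half_width


# Hypothetical ratings from eight listeners for one synthesized utterance.
mos, hw = mos_with_ci([4, 5, 4, 4, 3, 5, 4, 4])
print(f"MOS = {mos:.3f} ± {hw:.3f}")
```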
